This post is a series of quick thoughts related to logistic regression. It starts with this article on moving between logit and probability scales.

***

Logistic regression models the probability of a yes/no event occurring. It gives you more information than a model that simply tries to classify yeses and nos. I advised a client to move from an uninterpretable classification method to logistic regression and they were so excited about the result that they filed a patent on it.

It’s too late to patent logistic regression, but they filed a patent on the application of logistic regression to their domain. I don’t know whether the patent was ever granted.

***

The article cited above is entitled “Rough approximations to move between logit and probability scales.” Here is a paragraph from the article giving its motivation.

> When working in this space, it’s helpful to have some approximations to move between the logit and probability scales. (As an analogy, it is helpful to know that for a normal distribution, the interval ± 2 standard deviations around the mean covers about 95% of the distribution, while ± 3 standard deviations covers about 99%.)

Here are half the results from the article; the other half follow by symmetry.

| prob  | logit |
|-------|-------|
| 0.500 | 0     |
| 0.750 | 1     |
| 0.900 | 2     |
| 0.950 | 3     |
| 0.999 | 7     |

Zero on the logit scale corresponds exactly to a probability of 0.5. The other values are approximate.
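One way to see how rough the approximations are is to run the integer logits from the table back through the inverse logit. This is only a sketch: it assumes the standard definition of the logit with natural logs, whose inverse is 1/(1 + exp(−x)), and the name `inv_logit` is my own choice rather than anything from the article.

```python
import math

def inv_logit(x):
    """Map a value on the logit scale back to a probability."""
    return 1 / (1 + math.exp(-x))

# Integer logits from the table, converted back to probabilities.
logits = [0, 1, 2, 3, 7]
probs = [inv_logit(x) for x in logits]

for x, p in zip(logits, probs):
    print(f"logit {x}: probability {p:.4f}")
```

The exact probabilities come out to 0.5, then roughly 0.73, 0.88, 0.95, and 0.999, so only the first row is exact.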

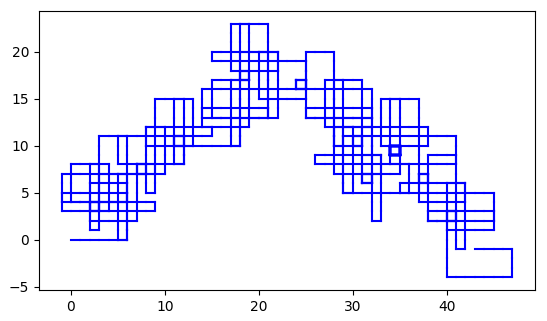

When I say the rest of the table follows by symmetry, I’m alluding to the fact that

logit(1 − p) = − logit(p).

So, for example, because logit(0.999) ≈ 7, logit(0.001) ≈ −7.
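The symmetry follows from the definition. Assuming the usual logit(p) = log(p / (1 − p)), swapping p and 1 − p inverts the ratio inside the log, and the log of a reciprocal is the negative of the log. Here is a quick numerical check, with `logit` written out by hand as an illustration.

```python
import math

def logit(p):
    """Log odds of a probability p, using the natural log."""
    return math.log(p / (1 - p))

# Compare logit(1 - p) with -logit(p) for a few probabilities.
checks = [(p, logit(1 - p), -logit(p)) for p in [0.75, 0.9, 0.999]]

for p, lhs, rhs in checks:
    print(f"p = {p}: logit(1 - p) = {lhs:.4f}, -logit(p) = {rhs:.4f}")
```

The two columns agree up to floating point error.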

***

The article reminded me of the decibel scale. As I wrote in another post, “It’s a curious and convenient fact that many decibel values are close to integers.”

- 3 dB ≈ 2

- 6 dB ≈ 4

- 7 dB ≈ 5

- 9 dB ≈ 8

I was curious whether the logit / probability approximations were as accurate as these decibel approximations. Alas, they are not. They are rough approximations, as advertised in the title, but still useful.
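Here is a rough sketch of one way to make the comparison. It assumes that relative error is a fair yardstick, that the decibel values are power ratios (so x dB corresponds to 10^(x/10)), and that the logit is the natural log of the odds. It checks a few rows of the table above; the helper `rel_err` and the dictionary names are mine.

```python
import math

def rel_err(approx, exact):
    """Relative error of an approximation to an exact value."""
    return abs(approx - exact) / abs(exact)

# Decibels -> approximate power ratio.
decibel_approx = {3: 2, 6: 4, 7: 5, 9: 8}
decibel_errors = [rel_err(ratio, 10 ** (db / 10))
                  for db, ratio in decibel_approx.items()]

# Probability -> approximate logit, for a few rows of the table.
logit_approx = {0.75: 1, 0.9: 2, 0.999: 7}
logit_errors = [rel_err(approx, math.log(p / (1 - p)))
                for p, approx in logit_approx.items()]

print(f"worst decibel error: {max(decibel_errors):.2%}")
print(f"worst logit error:   {max(logit_errors):.2%}")
```

By this measure the decibel approximations are all within 1%, while the logit approximations are off by as much as about 9%.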

***

The article also reminded me of a comment by Andrew Gelman and Jennifer Hill on why natural logs are natural for regression.